Setting up AutoGPT¶

📋 Requirements¶

Choose an environment to run AutoGPT in (pick one):

- Docker (recommended)

- Python 3.10 or later (see the instructions for Windows)

- VS Code + Dev Container
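If you choose the plain Python option, you can confirm that your interpreter meets the 3.10 minimum with the short check below. This is a minimal sketch that only inspects sys.version_info. The MIN_VERSION constant and the is_supported helper are illustrative names, not part of AutoGPT.

```python
import sys

# Minimum interpreter version from the requirements list above
MIN_VERSION = (3, 10)


def is_supported(version_info=sys.version_info) -> bool:
    """Return True if the (major, minor, ...) version meets MIN_VERSION."""
    return tuple(version_info[:2]) >= MIN_VERSION


if __name__ == "__main__":
    current = ".".join(map(str, sys.version_info[:3]))
    status = "OK" if is_supported() else "too old (AutoGPT needs 3.10 or later)"
    print(f"Python {current}: {status}")
```

Run it with the same python command you plan to use for AutoGPT, because several interpreters may be installed side by side.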

🗝️ Getting an API key¶

Get your OpenAI API key from: https://platform.openai.com/account/api-keys.

Attention

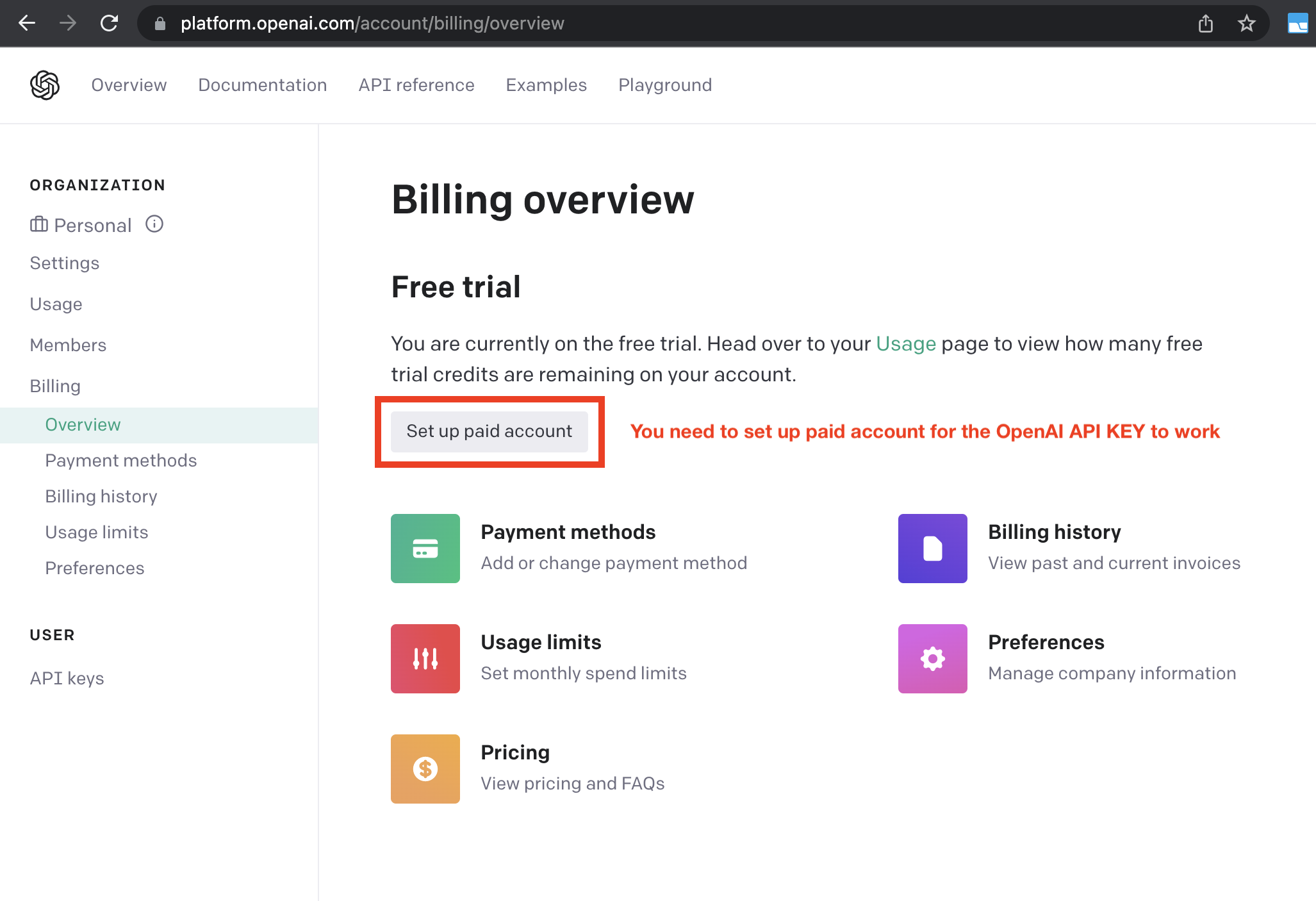

To use the OpenAI API with AutoGPT, we strongly recommend setting up billing (i.e. a paid account). Free accounts are limited to 3 API calls per minute, which can cause the application to crash.

You can set up a paid account at Manage account > Billing > Overview.

Important

It's highly recommended that you keep track of your API costs on the Usage page. You can also set limits on how much you spend on the Usage limits page.

Setting up AutoGPT¶

Set up with Docker¶

- Make sure you have Docker installed (see Requirements).

- Create a project directory for AutoGPT:

    mkdir AutoGPT
    cd AutoGPT

- In the project directory, create a file called docker-compose.yml with the following contents:

    version: "3.9"
    services:
      auto-gpt:
        image: significantgravitas/auto-gpt
        env_file:
          - .env
        profiles: ["exclude-from-up"]
        volumes:
          - ./auto_gpt_workspace:/app/auto_gpt_workspace
          - ./data:/app/data
          ## allow auto-gpt to write logs to disk
          - ./logs:/app/logs
          ## uncomment following lines if you want to make use of these files
          ## you must have them existing in the same folder as this docker-compose.yml
          #- type: bind
          #  source: ./azure.yaml
          #  target: /app/azure.yaml
          #- type: bind
          #  source: ./ai_settings.yaml
          #  target: /app/ai_settings.yaml

- Create the necessary configuration files. If needed, you can find templates in the repository.

- Pull the latest image from Docker Hub:

    docker pull significantgravitas/auto-gpt

- Continue to Run with Docker.
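Before the first run, you may want to confirm two things: the host folders used as volumes should exist, and the files Compose expects should be in place. Compose reports an error when a file listed under env_file is missing. On Linux, folders that Docker creates for you may end up owned by root. The sketch below handles both checks in Python. prepare_project, VOLUME_DIRS and REQUIRED_FILES are hypothetical names, and the folder list simply mirrors the volumes in the docker-compose.yml above.

```python
from __future__ import annotations

from pathlib import Path

# Host folders mounted as volumes in the docker-compose.yml above
VOLUME_DIRS = ("auto_gpt_workspace", "data", "logs")
# Files the Docker setup expects in the project folder (env_file points at .env)
REQUIRED_FILES = ("docker-compose.yml", ".env")


def prepare_project(project_dir: Path) -> list[str]:
    """Create the project and volume folders; return required files still missing."""
    project_dir.mkdir(parents=True, exist_ok=True)
    for name in VOLUME_DIRS:
        (project_dir / name).mkdir(exist_ok=True)
    return [name for name in REQUIRED_FILES if not (project_dir / name).is_file()]
```

For example, prepare_project(Path("AutoGPT")) returns an empty list once both docker-compose.yml and .env exist in that folder.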

Docker only supports headless browsing

AutoGPT uses a browser in headless mode by default: HEADLESS_BROWSER=True.

When running AutoGPT with Docker, do not change this setting, or AutoGPT will crash.
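To catch an accidental change before starting the container, you can scan your .env for the HEADLESS_BROWSER setting. This is a minimal sketch built on a simplified parsing assumption, not AutoGPT's own loader: lines starting with # are ignored and the last active assignment wins. It is deliberately strict and only treats the literal value True as enabled, since other spellings may not be accepted. headless_browser_enabled is a hypothetical helper name.

```python
def headless_browser_enabled(env_text: str) -> bool:
    """Return True unless an active .env line sets HEADLESS_BROWSER to something other than True.

    Simplified parsing (an assumption): '#' lines are skipped and the
    last active HEADLESS_BROWSER assignment wins.
    """
    value = "True"  # the default documented above
    for raw_line in env_text.splitlines():
        line = raw_line.strip()
        if line.startswith("#") or "=" not in line:
            continue
        key, _, val = line.partition("=")
        if key.strip() == "HEADLESS_BROWSER":
            value = val.strip()
    # Deliberately strict: only the literal True counts as enabled
    return value == "True"
```

A typical call is headless_browser_enabled(Path(".env").read_text()) with Path imported from pathlib. If it returns False, restore HEADLESS_BROWSER=True before running docker compose.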

Set up with Git¶

Important

Make sure you have Git installed for your OS.

Executing commands

To execute the given commands, open a CMD, Bash, or PowerShell window.

On Windows: press Win+X and pick Terminal, or Win+R and enter cmd

- Clone the repository:

    git clone https://github.com/Significant-Gravitas/AutoGPT.git

- Navigate to the AutoGPT application folder inside the cloned repository:

    cd AutoGPT/autogpts/autogpt

Set up without Git/Docker¶

Warning

We recommend using Git or Docker to make updating easier. Also note that some features, such as Python execution, only work inside Docker for security reasons.

- Download Source code (zip) from the latest release.
- Extract the zip file into a folder.
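If you have no unzip tool at hand, Python's standard zipfile module can do the extraction. This is a minimal sketch that assumes you have already downloaded the archive. extract_release is a hypothetical helper name, and both paths are up to you. GitHub source archives typically wrap everything in a single top-level folder, so the helper returns that folder when there is exactly one.

```python
import zipfile
from pathlib import Path


def extract_release(archive: Path, destination: Path) -> Path:
    """Extract a downloaded source-code zip and return the folder holding the code."""
    destination.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as zf:
        # First path component of every entry, e.g. a single "<repo>-<tag>" folder
        top_level = {name.split("/", 1)[0] for name in zf.namelist()}
        zf.extractall(destination)
    if len(top_level) == 1:
        return destination / top_level.pop()
    return destination
```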

Configuration¶

- Find the file named .env.template in the main AutoGPT folder. This file may be hidden by default in some operating systems due to the dot prefix. To reveal hidden files, follow the instructions for your specific operating system: Windows, macOS.
- Create a copy of .env.template and call it .env. If you're already in a command prompt/terminal window, run cp .env.template .env (in Windows CMD: copy .env.template .env).
- Open the .env file in a text editor.
- Find the line that says OPENAI_API_KEY=.
- After the =, enter your unique OpenAI API key without any quotes or spaces.
- Enter any other API keys or tokens for services you would like to use.

  Note

  To activate and adjust a setting, remove the # prefix.

- Save and close the .env file.
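If you prefer to script the steps above, here is a minimal sketch. It assumes .env.template sits in the project folder and uses plain KEY=value lines. configure_env, set_env_value and _key_of are hypothetical helper names, not part of AutoGPT. When both an active KEY=... line and a commented # KEY=... line exist, the sketch updates the active one. Otherwise it activates the commented line by dropping the # prefix, and if neither exists it appends a new line.

```python
from __future__ import annotations

import shutil
from pathlib import Path


def _key_of(line: str) -> str | None:
    """Return the variable name of a KEY=value line, or None if there is no '='."""
    name, sep, _ = line.partition("=")
    return name.strip() if sep else None


def set_env_value(lines: list[str], key: str, value: str) -> list[str]:
    """Set key=value in .env lines, preferring an active line over a commented one."""
    result = list(lines)
    active = [i for i, line in enumerate(result) if _key_of(line.strip()) == key]
    # A commented-out setting ("# KEY=...") is activated by dropping the # prefix
    commented = [
        i
        for i, line in enumerate(result)
        if line.strip().startswith("#") and _key_of(line.strip().lstrip("#")) == key
    ]
    targets = active or commented
    if targets:
        result[targets[0]] = f"{key}={value}"
    else:
        result.append(f"{key}={value}")
    return result


def configure_env(project_dir: Path, api_key: str) -> Path:
    """Copy .env.template to .env if needed, then fill in OPENAI_API_KEY."""
    env_path = project_dir / ".env"
    if not env_path.exists():
        shutil.copyfile(project_dir / ".env.template", env_path)
    lines = env_path.read_text(encoding="utf-8").splitlines()
    # Strip surrounding whitespace and quotes: the key must be entered without them
    clean_key = api_key.strip().strip("\"'")
    lines = set_env_value(lines, "OPENAI_API_KEY", clean_key)
    env_path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return env_path
```

For example, configure_env(Path("."), "<your-api-key>") run from the AutoGPT folder creates or updates .env. The angle-bracket value is a placeholder for your real key.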

Using a GPT Azure instance

If you want to use GPT on an Azure instance, set USE_AZURE to True in your .env file and create an Azure configuration file:

- Rename azure.yaml.template to azure.yaml and provide the relevant azure_api_base, azure_api_version and all the deployment IDs for the relevant models in the azure_model_map section:
    - fast_llm_deployment_id: your gpt-3.5-turbo or gpt-4 deployment ID
    - smart_llm_deployment_id: your gpt-4 deployment ID
    - embedding_model_deployment_id: your text-embedding-ada-002 v2 deployment ID

Example:

    # Please specify all of these values as double-quoted strings
    # Replace the strings in angle brackets (<>) with your own deployment names
    azure_model_map:
        fast_llm_deployment_id: "<auto-gpt-deployment>"
        ...

Details can be found in the openai-python docs, and in the Azure OpenAI docs for the embedding model. If you're on Windows you may need to install an MSVC library.
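A quick way to catch a forgotten field or a leftover placeholder is to check the parsed file against the keys listed above. This is a minimal sketch that works on an already-parsed mapping. It assumes you load azure.yaml yourself, for example with PyYAML's yaml.safe_load if that package is installed. azure_config_problems and the two key tuples are hypothetical names, and any other keys in the file are ignored.

```python
from __future__ import annotations

# Keys named in the Azure setup steps above
REQUIRED_TOP_LEVEL = ("azure_api_base", "azure_api_version")
REQUIRED_DEPLOYMENTS = (
    "fast_llm_deployment_id",
    "smart_llm_deployment_id",
    "embedding_model_deployment_id",
)


def azure_config_problems(config: dict) -> list[str]:
    """List problems in a parsed azure.yaml mapping; an empty list means it looks complete."""
    problems: list[str] = []

    def check(value, label: str) -> None:
        if not isinstance(value, str) or not value.strip():
            problems.append(f"{label} is missing or not a string")
        elif value.startswith("<") and value.endswith(">"):
            problems.append(f"{label} still contains the placeholder {value}")

    for key in REQUIRED_TOP_LEVEL:
        check(config.get(key), key)
    model_map = config.get("azure_model_map")
    if not isinstance(model_map, dict):
        problems.append("azure_model_map section is missing")
        return problems
    for key in REQUIRED_DEPLOYMENTS:
        check(model_map.get(key), f"azure_model_map.{key}")
    return problems
```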

Running AutoGPT¶

Run with Docker¶

The easiest way is to use Docker Compose.

Important: Docker Compose version 1.29.0 or later is required to use version 3.9 of the Compose file format. You can check the version of Docker Compose installed on your system by running the following command:

docker compose version

This will display the version of Docker Compose that is currently installed on your system.

If you need to upgrade Docker Compose to a newer version, you can follow the installation instructions in the Docker documentation: https://docs.docker.com/compose/install/
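If you want to script the version check, the sketch below pulls the first X.Y.Z number out of the version banner and compares it with the 1.29.0 minimum stated above. It assumes the Compose v2 plugin (docker compose). The older standalone v1 binary is invoked as docker-compose version instead, and its output can be passed to the same parser. The function names are hypothetical.

```python
from __future__ import annotations

import re
import subprocess

# Minimum Docker Compose version stated above for Compose file format 3.9
MIN_COMPOSE = (1, 29, 0)


def parse_compose_version(output: str) -> tuple[int, ...] | None:
    """Return the first X.Y.Z version number in a Compose version banner."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", output)
    if match is None:
        return None
    return tuple(int(part) for part in match.groups())


def compose_is_recent_enough(output: str) -> bool:
    """True if the banner reports a version at or above MIN_COMPOSE."""
    version = parse_compose_version(output)
    return version is not None and version >= MIN_COMPOSE


def installed_compose_output() -> str:
    """Run `docker compose version`; raises if Docker or the Compose plugin is missing."""
    result = subprocess.run(
        ["docker", "compose", "version"], capture_output=True, text=True, check=True
    )
    return result.stdout
```

A typical call is compose_is_recent_enough(installed_compose_output()).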

Once you have a recent version of Docker Compose, run the commands below in your AutoGPT folder.

- Build the image. If you have pulled the image from Docker Hub, skip this step. (Note: you will need to do this if you modify requirements.txt to add or remove dependencies such as Python libraries or frameworks.)

    docker compose build auto-gpt

- Run AutoGPT:

    docker compose run --rm auto-gpt

  By default, this will also start and attach a Redis memory backend. If you do not want this, comment out or remove the depends_on: - redis and redis: sections from docker-compose.yml. For related settings, see Memory > Redis setup.

You can pass extra arguments, e.g. running with --gpt3only and --continuous:

docker compose run --rm auto-gpt --gpt3only --continuous

If you dare, you can also build and run it with "vanilla" docker commands:

docker build -t auto-gpt .

docker run -it --env-file=.env -v $PWD:/app auto-gpt

docker run -it --env-file=.env -v $PWD:/app --rm auto-gpt --gpt3only --continuous

Run with Dev Container¶

- Install the Dev Containers extension (formerly Remote - Containers) in VS Code.

- Open the command palette with F1 and run Dev Containers: Open Folder in Container.

- Run ./run.sh.

Run without Docker¶

Create a Virtual Environment¶

Create a virtual environment to run in.

python -m venv .venv

source .venv/bin/activate

(On Windows, activate it with .venv\Scripts\activate instead.)

pip3 install --upgrade pip
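The same steps can be done from Python with the standard venv module, which is handy in setup scripts. This is a minimal sketch. create_venv and venv_python are hypothetical helper names. It mirrors the venv command line, which uses symlinks except on Windows, and it calls the environment's own interpreter directly, so no activation is needed for the pip upgrade.

```python
import os
import subprocess
import venv
from pathlib import Path


def venv_python(env_dir: Path) -> Path:
    """Return the interpreter path inside a virtual environment."""
    # Windows keeps executables in Scripts\, other platforms in bin/
    if os.name == "nt":
        return env_dir / "Scripts" / "python.exe"
    return env_dir / "bin" / "python"


def create_venv(env_dir: Path, upgrade_pip: bool = True) -> Path:
    """Roughly `python -m venv .venv` followed by `pip install --upgrade pip`."""
    venv.create(env_dir, with_pip=upgrade_pip, symlinks=os.name != "nt")
    python = venv_python(env_dir)
    if upgrade_pip:
        subprocess.run(
            [str(python), "-m", "pip", "install", "--upgrade", "pip"], check=True
        )
    return python
```

For example, create_venv(Path(".venv")) returns the path of the new interpreter once pip has been upgraded.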

Warning

For security reasons, certain features (such as Python execution) are disabled by default when running without Docker. So even if you run the program outside a Docker container, you currently still need Docker to execute scripts.

Simply run the startup script in your terminal. This will install any necessary Python packages and launch AutoGPT.

- On Linux/macOS:

    ./run.sh

- On Windows:

    .\run.bat

If this gives errors, make sure you have a compatible Python version installed. See also the requirements.